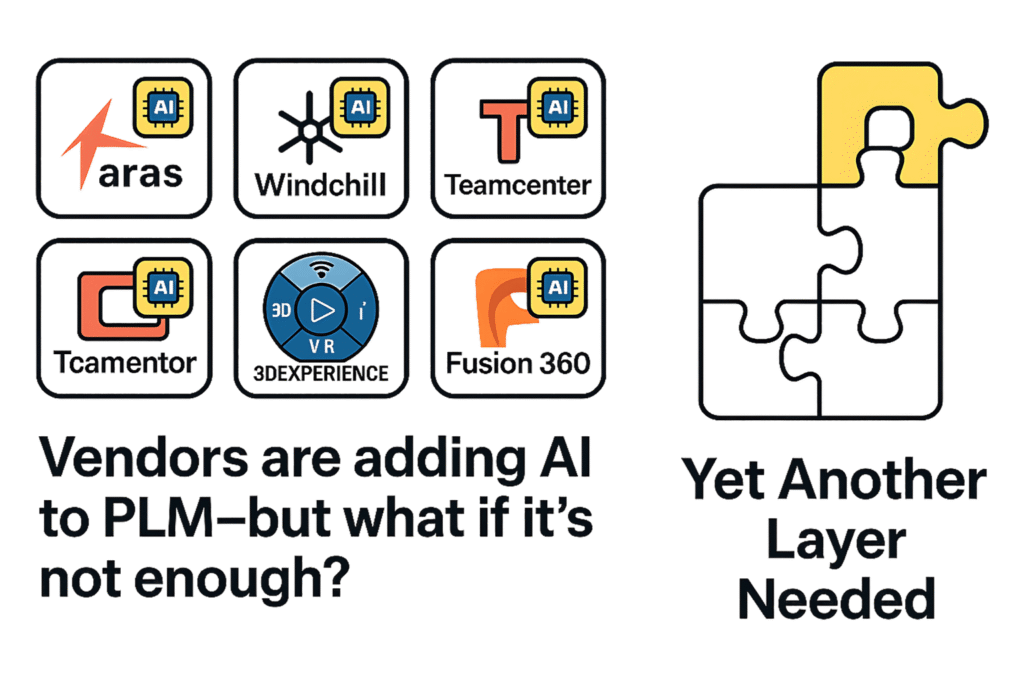

Today, many PLM software vendors are adding AI features to their platforms. While functions like part search, document summarization, or metadata classification are useful, they often fall short of meeting the needs of engineering teams. For more complex scenarios such as real-time BOM comparison, CAD–ERP–MES integration, or IP risk analysis, an additional layer is required.

This is where the concept of a “PLM Agent” comes in. In this blog post, we explore what PLM agents are, how they work, the key components you’ll need, as well as security considerations and practical use cases.

Vendors are adding AI to PLM—but what if it’s not enough?

SaaS PLM platforms like Aras Innovator SaaS, Windchill+, Teamcenter X, 3DEXPERIENCE, and Fusion 360 Manage are embedding AI for part search, documentation summarization, or metadata classification. Useful—but limited.

Going Beyond Vendor AI in PLM


If you need:

- Real-time BOM comparison,

- Cross-system reasoning (CAD + ERP + MES),

- IP-sensitive deployment,

- Or a domain-tuned AI assistant for your products…

…you’ll need to go beyond what’s on the vendor roadmap.
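To make “cross-system reasoning” concrete, here is a minimal sketch of the kind of check that only becomes possible once CAD, ERP, and MES data sit side by side. It is plain Python over hypothetical in-memory records. The part numbers, field names, and the `cross_check` helper are illustrative assumptions, not any vendor’s schema or API.

```python
# Hypothetical per-system records keyed by part number. In practice these
# would come from CAD/PDM, ERP, and MES exports or APIs.
cad = {
    "PN-100": {"revision": "C", "material": "AL6061"},
    "PN-200": {"revision": "B", "material": "SS304"},
}
erp = {
    "PN-100": {"revision": "B", "unit_cost": 12.40},
    "PN-200": {"revision": "B", "unit_cost": 3.10},
}
mes = {
    "PN-100": {"revision": "B", "open_work_orders": 4},
}


def cross_check(part_number: str) -> list[str]:
    """Return findings that only show up when all three systems are compared."""
    findings = []
    # Collect the revision each system believes is current.
    revs = {
        name: system[part_number]["revision"]
        for name, system in (("CAD", cad), ("ERP", erp), ("MES", mes))
        if part_number in system
    }
    if len(set(revs.values())) > 1:
        detail = ", ".join(f"{name}={rev}" for name, rev in revs.items())
        findings.append(f"{part_number}: revision mismatch ({detail})")
    # Open work orders on the shop floor still built to an older revision?
    open_wos = mes.get(part_number, {}).get("open_work_orders", 0)
    if open_wos and "CAD" in revs and revs["CAD"] != revs["MES"]:
        findings.append(
            f"{part_number}: {open_wos} open work orders at rev {revs['MES']}, "
            f"CAD is at rev {revs['CAD']}"
        )
    return findings


for pn in cad:
    print(cross_check(pn))
```

For PN-100 this reports both the revision mismatch and the open work orders still at the older revision; PN-200 is consistent and returns nothing. One reasonable design is to let code find such mismatches and let the LLM explain them, rather than asking the model to spot them unaided.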

What’s a PLM Agent?

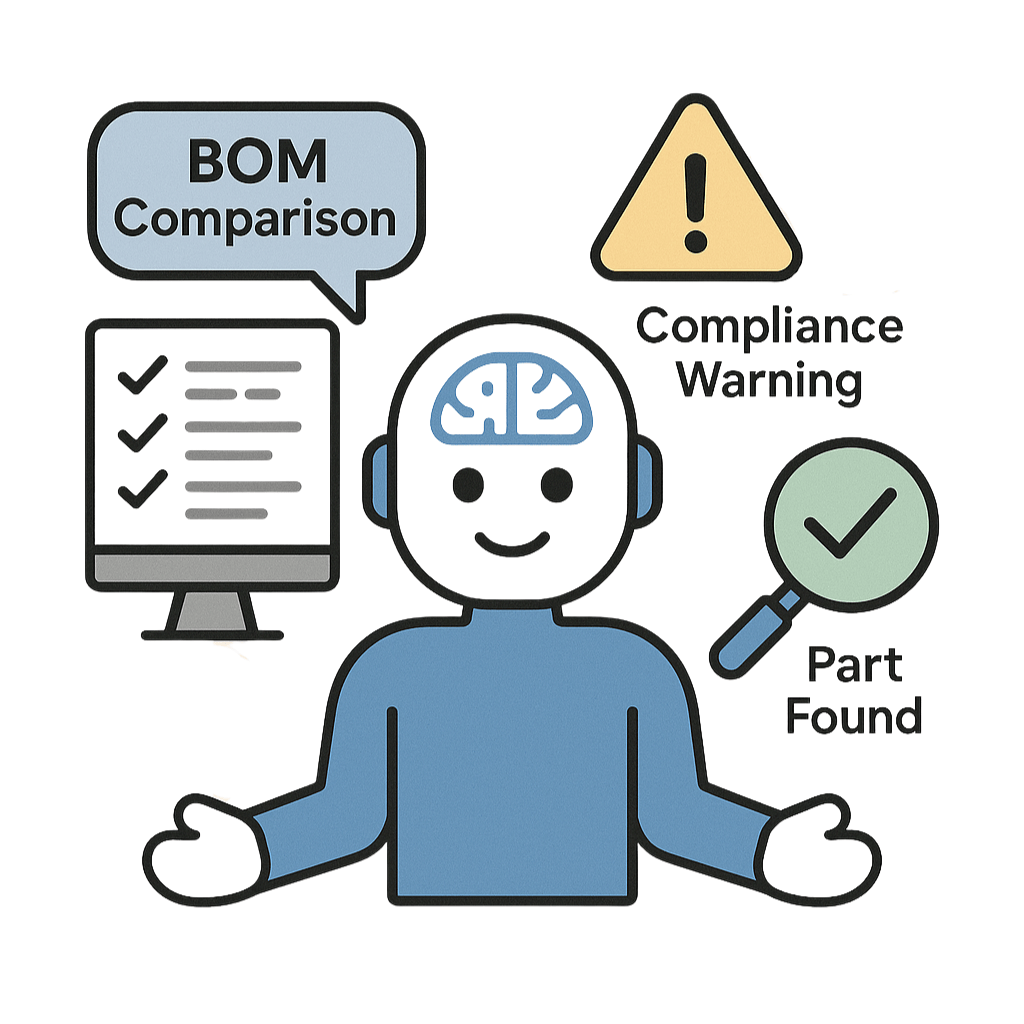

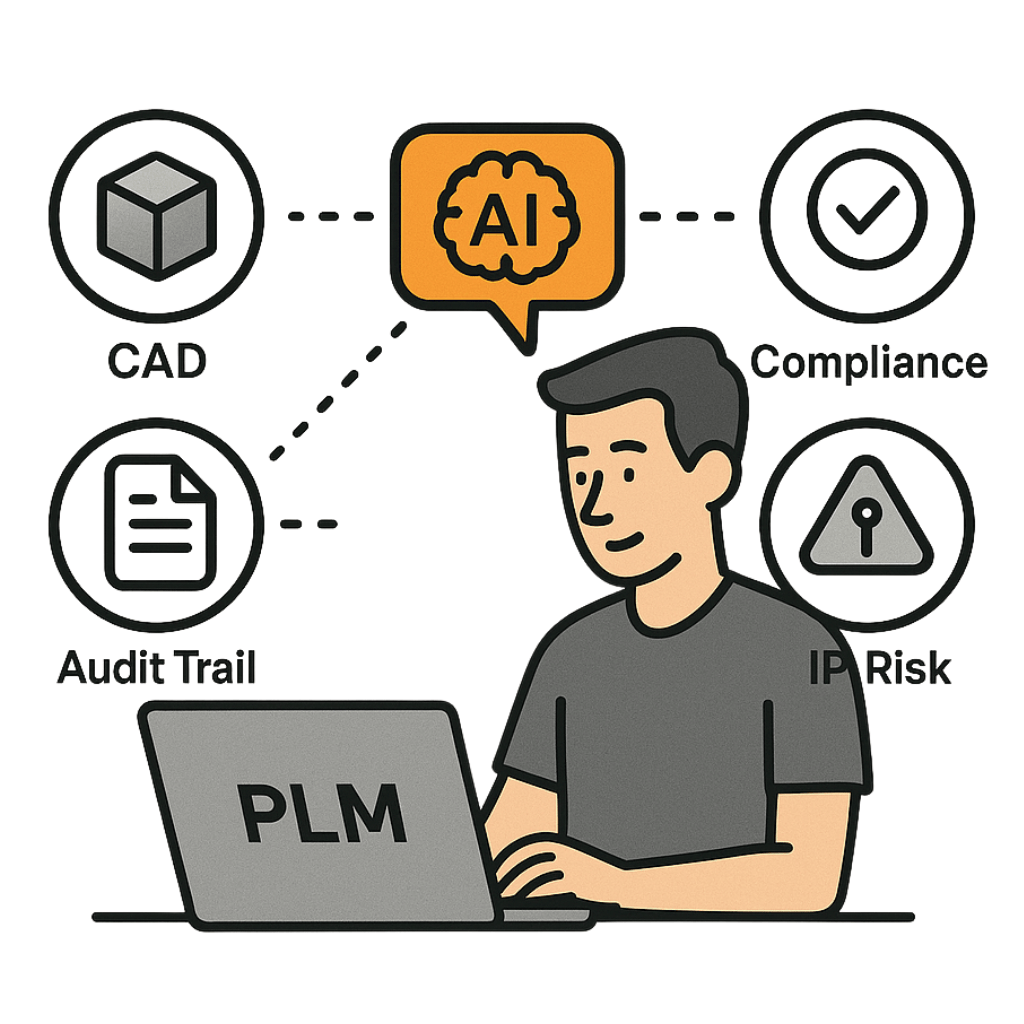

PLM Agents: Smarter Data, Better Decisions

A PLM agent is a custom AI assistant that interprets, reasons over, and enhances your product data. Think of it as an internal LLM-powered tool that can:

- Explain BOM differences across versions

- Assess change impacts

- Flag compliance gaps

- Help engineers find reusable parts

- Detect IP and compliance risk zones (ITAR export controls, REACH/RoHS substance restrictions)

And unlike vendor-embedded tools, you control the model, the data, and the deployment.
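As an illustration of the first capability, explaining BOM differences, here is a minimal sketch that assumes a flat, single-level BOM represented as a mapping from part number to quantity. `diff_bom` and `explain` are hypothetical helpers; a real BOM would also carry levels, reference designators, and effectivity.

```python
def diff_bom(old: dict[str, int], new: dict[str, int]) -> dict[str, list]:
    """Compare two flat BOM versions (part number -> quantity)."""
    added = sorted(pn for pn in new if pn not in old)
    removed = sorted(pn for pn in old if pn not in new)
    qty_changed = sorted(
        (pn, old[pn], new[pn]) for pn in old.keys() & new.keys() if old[pn] != new[pn]
    )
    return {"added": added, "removed": removed, "qty_changed": qty_changed}


def explain(diff: dict[str, list]) -> str:
    """Render the structured diff as plain sentences (or as LLM prompt context)."""
    lines = [f"Added: {pn}" for pn in diff["added"]]
    lines += [f"Removed: {pn}" for pn in diff["removed"]]
    lines += [
        f"Quantity of {pn} changed from {before} to {after}"
        for pn, before, after in diff["qty_changed"]
    ]
    return "\n".join(lines) or "No differences"


rev_b = {"PN-100": 2, "PN-200": 8, "PN-300": 1}
rev_c = {"PN-100": 2, "PN-200": 6, "PN-400": 1}
print(explain(diff_bom(rev_b, rev_c)))
```

The split is the point: the diff is computed deterministically in code, and the LLM (if used at all) only turns the structured result into prose, so the model explains changes rather than detecting them.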

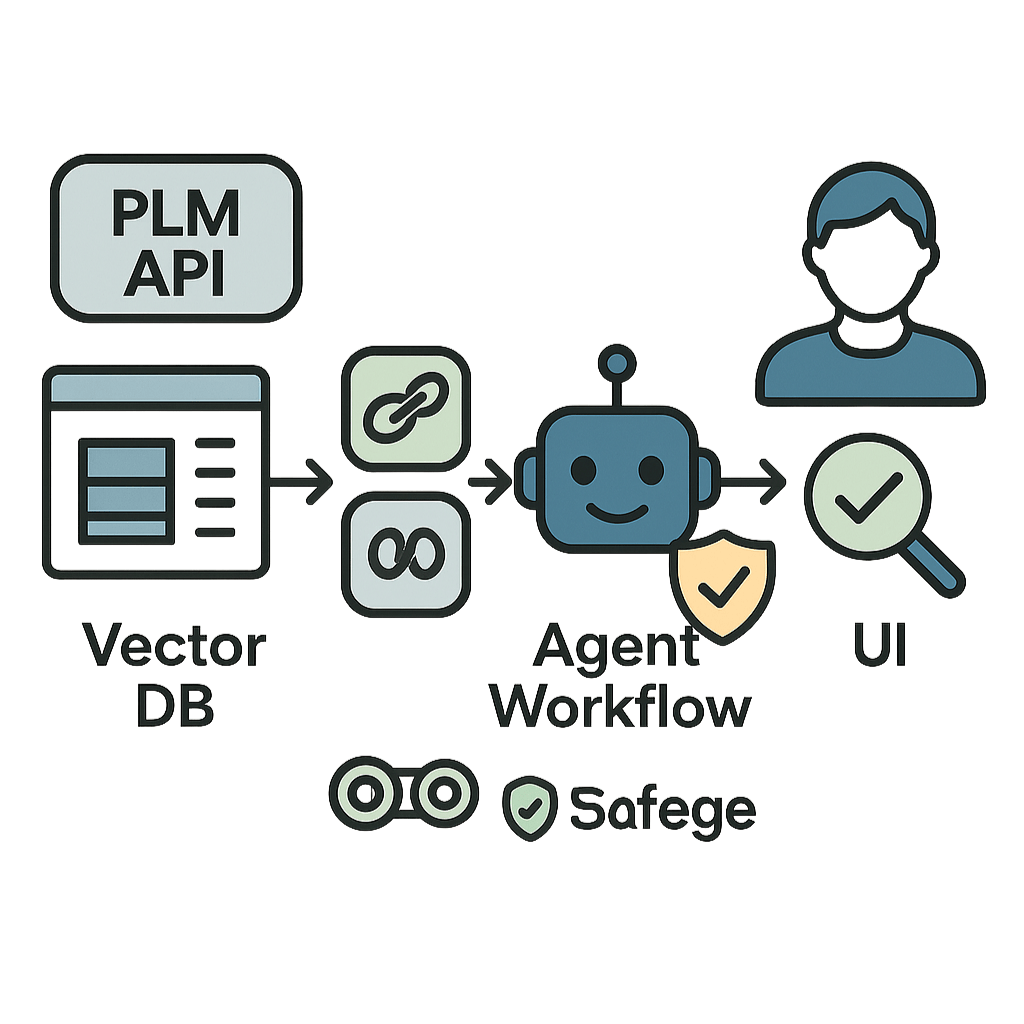

How It Works (Light Version)

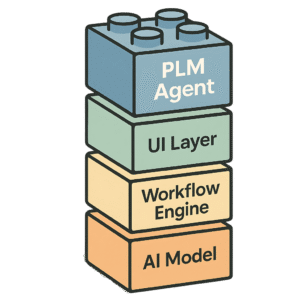

You don’t need to train your own model. You do need to wrap an existing LLM, served locally through a runtime such as LM Studio, Ollama, or OpenLLM, inside a smart workflow built with a framework like LangGraph or LangChain.
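To show what a “smart workflow” around the model means, here is a framework-free sketch of one agent step: route the question to a tool, fetch PLM context, and pass both to the model. In LangGraph these steps would typically be graph nodes, but this code does not use LangGraph’s or LangChain’s API. The tools return canned strings and `echo_llm` stands in for a locally served model, so `part_search`, `bom_diff`, `route`, `run_agent`, and `echo_llm` are all hypothetical.

```python
from typing import Callable


# Hypothetical tools; real versions would call your PLM API or vector index.
def part_search(question: str) -> str:
    return "Candidate parts: PN-100 (bracket, AL6061), PN-210 (bracket, SS304)"


def bom_diff(question: str) -> str:
    return "Rev B vs rev C: PN-300 removed, PN-400 added"


TOOLS: dict[str, Callable[[str], str]] = {"part_search": part_search, "bom_diff": bom_diff}


def route(question: str) -> str:
    """Pick a tool with keyword rules (many agents let the LLM choose instead)."""
    q = question.lower()
    if any(word in q for word in ("differ", "changed", "revision")):
        return "bom_diff"
    return "part_search"


def run_agent(question: str, llm: Callable[[str], str]) -> dict:
    """One pass of the workflow: route, call the tool, then ask the model."""
    tool = route(question)
    context = TOOLS[tool](question)
    prompt = (
        "Answer using only the PLM context below.\n"
        f"Context: {context}\n"
        f"Question: {question}"
    )
    return {"tool": tool, "context": context, "answer": llm(prompt)}


def echo_llm(prompt: str) -> str:
    """Stand-in for a locally served model; it just echoes the context line."""
    return "LLM saw: " + prompt.splitlines()[1]


result = run_agent("What changed between rev B and rev C?", echo_llm)
print(result["tool"])    # bom_diff
print(result["answer"])  # LLM saw: Context: Rev B vs rev C: PN-300 removed, PN-400 added
```

Replacing `echo_llm` with a function that calls your local model server is the only change needed to try a real LLM. Keyword routing is a deliberate simplification to keep the control flow visible.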

PLM Agent Workflow Overview

A typical pipeline includes:

- Extracting data from your PLM (via API or snapshot)

- Indexing it with a vector DB like Chroma or Weaviate

- Plugging the indexed data into an agent workflow

- Wrapping it in a secure UI (Streamlit, Slack, or even an iframe inside your PLM)

- Enforcing tight access controls and logging

Result: An AI agent that understands your schema, speaks your part taxonomy, and works within your walls.
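The extraction and indexing steps can be sketched without any external service. Below, hypothetical rows from a read-only PLM snapshot are indexed and queried by similarity. The “embedding” is a toy bag-of-words vector compared with cosine similarity, standing in for a real embedding model, and `ToyIndex` is an illustrative stand-in for a collection in Chroma, Qdrant, or Weaviate, not their API.

```python
import math
import re
from collections import Counter

# Hypothetical rows from a read-only PLM snapshot: only the fields the agent needs.
SNAPSHOT = [
    {"id": "PN-100", "text": "bracket aluminium 6061 anodized mounting"},
    {"id": "PN-210", "text": "bracket stainless steel 304 mounting"},
    {"id": "PN-550", "text": "harness cable assembly 12 pin connector"},
]


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts. A real pipeline uses an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyIndex:
    """In-memory stand-in for a vector DB collection."""

    def __init__(self) -> None:
        self.rows: list[tuple[str, Counter]] = []

    def add(self, doc_id: str, text: str) -> None:
        self.rows.append((doc_id, embed(text)))

    def query(self, text: str, k: int = 2) -> list[tuple[str, float]]:
        q = embed(text)
        scored = [(doc_id, cosine(q, vec)) for doc_id, vec in self.rows]
        return sorted(scored, key=lambda s: s[1], reverse=True)[:k]


index = ToyIndex()
for row in SNAPSHOT:
    index.add(row["id"], row["text"])

for doc_id, score in index.query("aluminium mounting bracket"):
    print(doc_id, round(score, 3))
```

The query ranks PN-100 first and PN-210 second. Only an ID and a short description are indexed per part; everything else stays in the PLM, which keeps the index small and limits what can leak into a prompt.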

What You’ll Need

Components and example tools:

- AI Model: an open-weight model served locally via LM Studio or Ollama, or GPT-4 through a private enterprise deployment

- Workflow Engine: LangGraph, LangChain, n8n

- Data Layer: API, Webhooks, ETL scripts

- Vector DB: Chroma, Qdrant, Weaviate, Supabase

- UI Layer: Slackbot, Streamlit, PLM embed

Your Toolkit for an AI-Powered PLM Agent

Effort? Expect 4–6 weeks for a first proof of concept, provided your IT and data governance teams are on board.

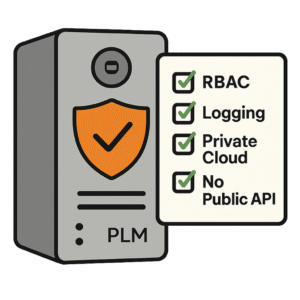

What About Security?

- ✅ Host everything in your own cloud or VM

- ✅ Read-only access to PLM during testing

- ✅ Role-based access control (RBAC)

- ✅ Log every prompt, access, and result

- ✅ Never send IP to public APIs

Protect Your IP When Using PLM AI

This is especially critical for sectors like aerospace, medtech, or defense.
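Two of the controls above, role-based access and logging, can be enforced in code before any PLM data reaches a prompt. The sketch assumes a hypothetical role model keyed on export-control class (`ROLE_CLEARANCE`), hypothetical part records, and an in-memory `AuditLog`. A real deployment would take roles from your identity provider and write logs to tamper-evident storage.

```python
import json
import time
from dataclasses import dataclass, field

# Hypothetical role model: export-control classes each role may see.
ROLE_CLEARANCE = {
    "engineer": {"NONE"},
    "itar_cleared_engineer": {"NONE", "ITAR"},
}

# Hypothetical PLM records with an export-control attribute.
PARTS = {
    "PN-100": {"desc": "mounting bracket", "export_class": "NONE"},
    "PN-900": {"desc": "guidance housing", "export_class": "ITAR"},
}


@dataclass
class AuditLog:
    entries: list[str] = field(default_factory=list)

    def record(self, **fields) -> None:
        """Append one JSON line per event."""
        self.entries.append(json.dumps({"ts": time.time(), **fields}, sort_keys=True))


def fetch_for_prompt(user: str, role: str, part_ids: list[str], log: AuditLog) -> list[dict]:
    """Filter PLM records by role before they can reach an LLM prompt, and log the access."""
    allowed = ROLE_CLEARANCE.get(role, set())  # unknown role -> nothing allowed
    visible, denied = [], []
    for pn in part_ids:
        rec = PARTS.get(pn)
        if rec is not None and rec["export_class"] in allowed:
            visible.append({"id": pn, **rec})
        else:
            denied.append(pn)
    log.record(action="plm_read", user=user, role=role, requested=part_ids, denied=denied)
    return visible


log = AuditLog()
print(fetch_for_prompt("alice", "engineer", ["PN-100", "PN-900"], log))
print(log.entries[-1])
```

Unknown roles get an empty clearance set, so the default is to deny. Prompts and model outputs can be recorded through the same `log.record(...)` call to cover the “log every prompt, access, and result” rule.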

Key Use Cases

- ECR triage & summarization

- CAD file classification

- REACH/RoHS supplier compliance checks

- Audit trail analysis

- NPI process guidance

- IP risk detection (ITAR, EAR)

Critical Use Cases for PLM AI

The goal is not to replace people but to boost speed, trust, and traceability.
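The REACH/RoHS supplier compliance check is the most rule-driven of these use cases, so much of it can be deterministic code, with the LLM summarizing findings. The sketch covers RoHS only and simplifies heavily: declarations are per part rather than per homogeneous material, the limits are a subset of the RoHS maximum concentration values (0.1% by weight for lead, mercury, and hexavalent chromium; 0.01% for cadmium), and `DECLARATIONS` and `rohs_findings` are hypothetical. Check the current directive and its exemptions before relying on any threshold.

```python
# Subset of RoHS maximum concentration values, in ppm by weight of homogeneous
# material (0.1% = 1000 ppm; cadmium 0.01% = 100 ppm). Verify against the
# current directive and applicable exemptions before relying on these.
ROHS_LIMITS_PPM = {
    "lead": 1000,
    "mercury": 1000,
    "cadmium": 100,
    "hexavalent chromium": 1000,
}

# Hypothetical supplier declarations: part number -> substance -> ppm.
# Simplification: real declarations are per homogeneous material, not per part.
DECLARATIONS = {
    "PN-100": {"lead": 40},
    "PN-550": {"lead": 2500, "cadmium": 20},
    "PN-610": {"cadmium": 150},
}


def rohs_findings(bom: list[str]) -> list[str]:
    """Flag declared substances over the limit, and parts with no declaration."""
    findings = []
    for pn in bom:
        declared = DECLARATIONS.get(pn)
        if declared is None:
            findings.append(f"{pn}: no supplier declaration on file")
            continue
        for substance, ppm in sorted(declared.items()):
            limit = ROHS_LIMITS_PPM.get(substance)
            if limit is not None and ppm > limit:
                findings.append(f"{pn}: {substance} at {ppm} ppm exceeds {limit} ppm")
    return findings


for line in rohs_findings(["PN-100", "PN-550", "PN-610", "PN-777"]):
    print(line)
```

This flags lead in PN-550, cadmium in PN-610, and the missing declaration for PN-777. Missing data is reported rather than silently treated as compliant, which is what an auditor would expect.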

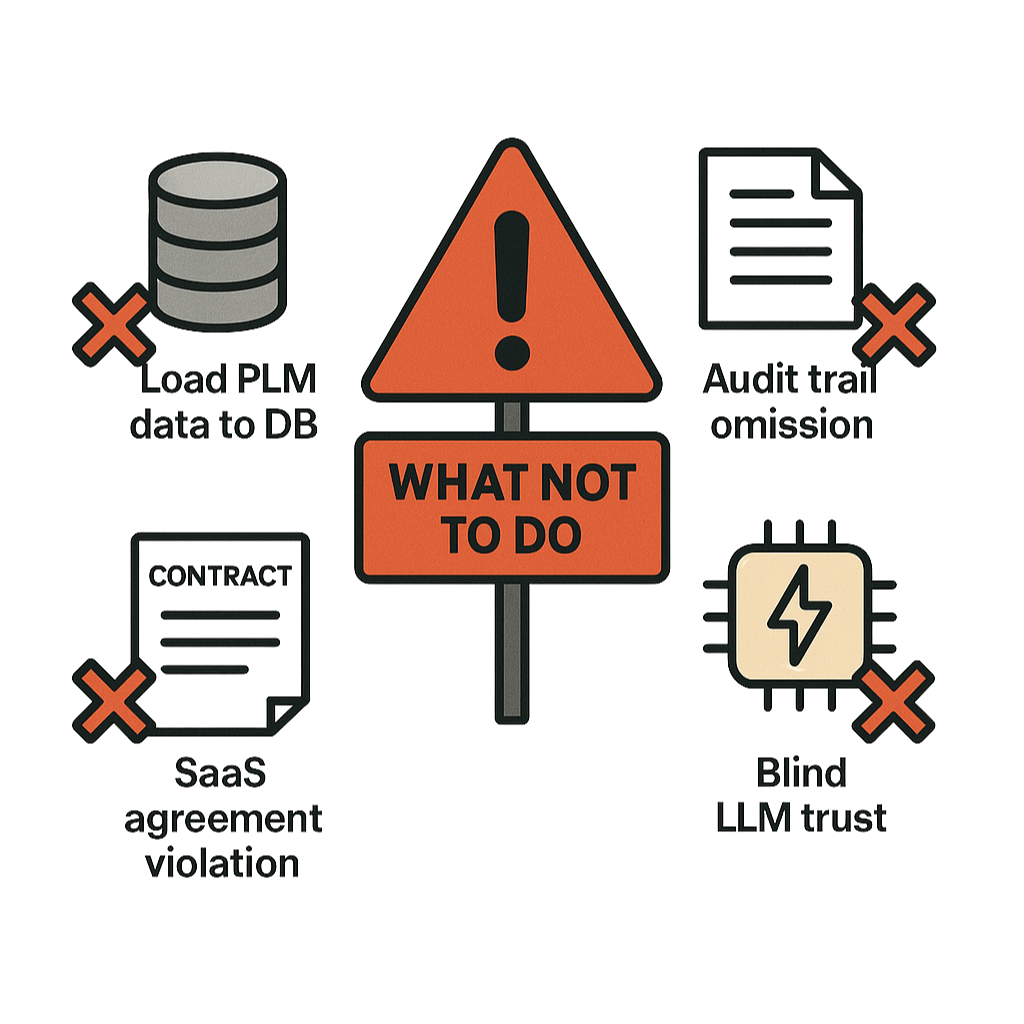

What Not To Do

- ❌ Don’t load your entire PLM into a vector DB.

- ❌ Don’t skip audit trails or RBAC.

- ❌ Don’t assume your SaaS PLM contract permits bulk API use.

- ❌ Don’t blindly trust the LLM—always validate critical outputs.
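One cheap way to act on the last rule is to check every identifier the model cites against the source data before the answer is shown. The sketch assumes a hypothetical `PN-###` part-number format and a set of known parts loaded from the PLM snapshot. It catches invented part numbers, not wrong reasoning about real ones, so it complements human review rather than replacing it.

```python
import re

# Hypothetical: valid part numbers loaded from the PLM snapshot,
# and a made-up part-number format.
KNOWN_PARTS = {"PN-100", "PN-210", "PN-550"}
PART_PATTERN = re.compile(r"\bPN-\d{3}\b")


def unverified_parts(llm_answer: str) -> list[str]:
    """Part numbers the model mentioned that do not exist in the PLM data."""
    return sorted(set(PART_PATTERN.findall(llm_answer)) - KNOWN_PARTS)


def gate(llm_answer: str) -> str:
    """Hold back answers that cite unknown parts instead of showing them as-is."""
    missing = unverified_parts(llm_answer)
    if missing:
        return "Needs review: unknown part numbers " + ", ".join(missing)
    return llm_answer


print(gate("Reuse PN-100 or PN-999; PN-210 is obsolete."))
```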

PLM AI Pitfalls to Avoid

Conclusion

PLM agents won’t replace your PLM—but they’ll make it smarter, faster, and yours.

If your engineering workflows need more than “AI search,” and your compliance team needs more than “chat summarization,” it’s time to explore your own AI layer.

- Build Your Own PLM Agent Before the Vendor Does - 12 September 2025